Transformers Almost From Scratch

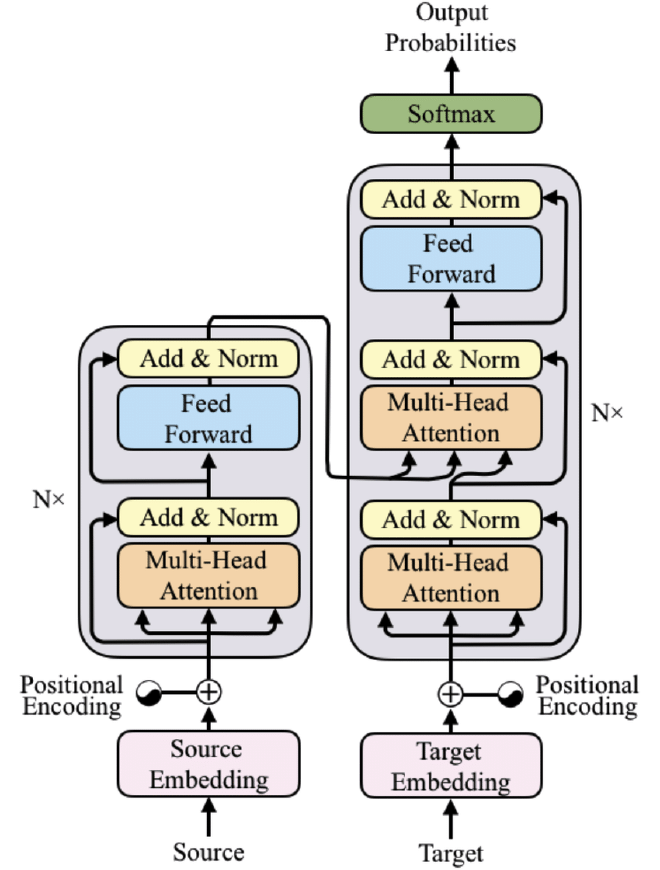

Similar to my neural networks from scratch project, I developed a from‑scratch exploration of the Transformer architecture in PyTorch, focused on understanding self‑attention, multi‑head attention, and Transformer blocks. It includes a small language model and also a Vision Transformer (ViT) variant! Training and metrics tooling is intentionally minimal to keep the core ideas clear.

Implementation details

Code on GitHub

Key features

- Self‑attention with causal masking

- Multi‑head attention (configurable heads/emb size/block size)

- Transformer blocks: attention + feedforward + residual + norm

- Tiny language model with token/positional embeddings and `generate`

- Vision Transformer (ViT) model added in `models.py` (training TODO)

- Minimal, educational training script for a small text dataset
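To make the first two features concrete, here is a minimal sketch of one causally masked attention head and a multi-head wrapper. The class names `Head` and `MultiHeadAttention` and their argument names are illustrative assumptions, not necessarily what `self_attention.py` uses. The causal mask is a lower-triangular matrix, so position t can only attend to positions 0..t.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Head(nn.Module):
    """One causal self-attention head (hypothetical sketch, not the repo's code)."""

    def __init__(self, emb_size: int, head_size: int, block_size: int):
        super().__init__()
        self.key = nn.Linear(emb_size, head_size, bias=False)
        self.query = nn.Linear(emb_size, head_size, bias=False)
        self.value = nn.Linear(emb_size, head_size, bias=False)
        # Lower-triangular mask: position t may attend only to positions <= t.
        self.register_buffer("mask", torch.tril(torch.ones(block_size, block_size)))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        B, T, C = x.shape
        k, q, v = self.key(x), self.query(x), self.value(x)  # each (B, T, head_size)
        # Scaled dot-product scores: (B, T, T)
        scores = q @ k.transpose(-2, -1) / k.shape[-1] ** 0.5
        # Future positions get -inf, so softmax assigns them zero weight.
        scores = scores.masked_fill(self.mask[:T, :T] == 0, float("-inf"))
        weights = F.softmax(scores, dim=-1)
        return weights @ v  # (B, T, head_size)


class MultiHeadAttention(nn.Module):
    """Runs num_heads heads in parallel, concatenates them, projects back to emb_size."""

    def __init__(self, num_heads: int, emb_size: int, block_size: int):
        super().__init__()
        assert emb_size % num_heads == 0, "emb_size must be divisible by num_heads"
        head_size = emb_size // num_heads
        self.heads = nn.ModuleList(
            [Head(emb_size, head_size, block_size) for _ in range(num_heads)]
        )
        self.proj = nn.Linear(emb_size, emb_size)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        out = torch.cat([h(x) for h in self.heads], dim=-1)  # (B, T, emb_size)
        return self.proj(out)


if __name__ == "__main__":
    torch.manual_seed(0)
    mha = MultiHeadAttention(num_heads=4, emb_size=32, block_size=8)
    print(mha(torch.randn(2, 8, 32)).shape)  # (batch, time, emb_size) is preserved
```

Splitting `emb_size` evenly across heads keeps the concatenated output the same width as the input, which is what lets the block add it back through a residual connection.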

File structure

- `self_attention.py`: attention head, multi‑head, feedforward, block (+ examples)

- `models.py`: `SmallLanguageModel` (LM head, `generate`), plus a ViT implementation

- `tokenizers.py`: `BaseTokenizer`, `SimpleTokenizer` (char‑level)

- `dataset/text_dataset.py`: `TextDataset`, train/val split, batching

- `train.py`: dataset/tokenizer wiring, model init, loop, text generation

- `dataset/input.txt`: raw text used for training

- `example_output.txt`: sample LM generations

- `.gitignore`: housekeeping
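The tokenizer and dataset roles listed above could look roughly like the sketch below. `CharTokenizer`, `train_val_split`, and `get_batch` are hypothetical stand-ins for `SimpleTokenizer` and `TextDataset`; the 90/10 contiguous split and random-offset batching are assumptions, not confirmed details of the repo.

```python
from typing import List, Tuple

import torch


class CharTokenizer:
    """Hypothetical char-level tokenizer in the spirit of SimpleTokenizer."""

    def __init__(self, text: str):
        chars = sorted(set(text))  # vocabulary = every distinct character, sorted
        self.stoi = {ch: i for i, ch in enumerate(chars)}
        self.itos = {i: ch for ch, i in self.stoi.items()}
        self.vocab_size = len(chars)

    def encode(self, s: str) -> List[int]:
        return [self.stoi[ch] for ch in s]

    def decode(self, ids: List[int]) -> str:
        return "".join(self.itos[i] for i in ids)


def train_val_split(data: torch.Tensor, train_frac: float = 0.9) -> Tuple[torch.Tensor, torch.Tensor]:
    """Contiguous split: the first train_frac of tokens for training, the rest for validation."""
    n = int(train_frac * len(data))
    return data[:n], data[n:]


def get_batch(data: torch.Tensor, batch_size: int, block_size: int) -> Tuple[torch.Tensor, torch.Tensor]:
    """Sample random windows; targets are the inputs shifted one token to the right."""
    ix = torch.randint(len(data) - block_size, (batch_size,))
    x = torch.stack([data[i : i + block_size] for i in ix])
    y = torch.stack([data[i + 1 : i + block_size + 1] for i in ix])
    return x, y


if __name__ == "__main__":
    text = "hello transformer world"
    tok = CharTokenizer(text)
    data = torch.tensor(tok.encode(text), dtype=torch.long)
    train_data, val_data = train_val_split(data)
    xb, yb = get_batch(train_data, batch_size=4, block_size=8)
    print(tok.vocab_size, xb.shape, yb.shape)
```

Because each target window is the input window shifted by one, a single batch trains the model on `block_size` next-character predictions per row.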

Requirements

- Python 3.8+

- PyTorch 1.10+

- tqdm

Install:

pip install torch tqdm

Training setup

Prepare data

Place your text in `dataset/input.txt`. The `SimpleTokenizer` builds a vocab and tokenizes characters.

Configure and train

Edit hyperparameters in `train.py` (`BATCH_SIZE`, `BLOCK_SIZE`, `LEARNING_RATE`, etc.), then:

python train.py
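A training step for a character LM usually flattens the (B, T, V) logits and (B, T) targets into one cross-entropy loss. The sketch below assumes a model that maps token ids to logits and uses AdamW; `lm_loss` and `train_steps` are hypothetical helpers, not functions from `train.py`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def lm_loss(logits: torch.Tensor, targets: torch.Tensor) -> torch.Tensor:
    """Cross-entropy over every position: (B, T, V) logits vs (B, T) targets."""
    B, T, V = logits.shape
    return F.cross_entropy(logits.reshape(B * T, V), targets.reshape(B * T))


def train_steps(model: nn.Module, get_batch, steps: int, lr: float) -> list:
    """Hypothetical minimal loop: sample a batch, compute loss, backprop, update."""
    optimizer = torch.optim.AdamW(model.parameters(), lr=lr)
    model.train()
    losses = []
    for _ in range(steps):
        xb, yb = get_batch()
        loss = lm_loss(model(xb), yb)
        optimizer.zero_grad(set_to_none=True)
        loss.backward()
        optimizer.step()
        losses.append(loss.item())
    return losses


if __name__ == "__main__":
    torch.manual_seed(0)
    vocab, block = 4, 8
    seq = torch.arange(vocab).repeat(50)  # toy data: 0,1,2,3,0,1,2,3,...

    def toy_batch(batch_size: int = 16):
        ix = torch.randint(len(seq) - block, (batch_size,))
        x = torch.stack([seq[i : i + block] for i in ix])
        y = torch.stack([seq[i + 1 : i + block + 1] for i in ix])
        return x, y

    # nn.Embedding(V, V) is a trivial bigram "model": token id -> next-token logits.
    losses = train_steps(nn.Embedding(vocab, vocab), toy_batch, steps=200, lr=0.1)
    print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
```

The toy bigram model stands in for the Transformer LM only to keep the example short; any module returning (B, T, V) logits fits the same loop.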

Generate text

After training, use the script's generation call or the model's `generate` method to sample text.
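Sampling from a causal LM typically repeats three steps: crop the context to the last `block_size` tokens, take the logits at the final position, and sample the next token from their softmax. The standalone function below is a sketch under that assumption; the model's actual `generate` signature (including whether it takes a `temperature` argument) may differ.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


@torch.no_grad()
def generate(model: nn.Module, idx: torch.Tensor, max_new_tokens: int,
             block_size: int, temperature: float = 1.0) -> torch.Tensor:
    """Extend idx of shape (B, T) by max_new_tokens sampled token ids (sketch)."""
    model.eval()
    for _ in range(max_new_tokens):
        idx_cond = idx[:, -block_size:]            # never exceed the context window
        logits = model(idx_cond)[:, -1, :]         # next-token logits: (B, V)
        probs = F.softmax(logits / temperature, dim=-1)
        next_id = torch.multinomial(probs, num_samples=1)  # (B, 1)
        idx = torch.cat([idx, next_id], dim=1)
    return idx
```

Decoding the returned ids with the tokenizer gives the sampled text; temperatures below 1.0 sharpen the distribution and make sampling greedier.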

Usage

Test core components:

python self_attention.py

Train the Transformer LM:

python train.py

Output

- `self_attention.py` prints tensor shapes to validate attention/blocks

- `train.py` prints training/validation loss and emits generated samples

- Example generations saved to `example_output.txt`
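A shape check similar in spirit to the one in `self_attention.py` can be run on a compact Transformer block. This sketch assumes a pre-norm layout (LayerNorm before attention and before the feedforward, each wrapped in a residual connection) and, for brevity, swaps in PyTorch's built-in `nn.MultiheadAttention` instead of a from-scratch head; the repo's block may place normalization differently.

```python
import torch
import torch.nn as nn


class FeedForward(nn.Module):
    """Position-wise MLP: expand by hidden_mult, ReLU, project back."""

    def __init__(self, emb_size: int, hidden_mult: int = 4, dropout: float = 0.0):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(emb_size, hidden_mult * emb_size),
            nn.ReLU(),
            nn.Linear(hidden_mult * emb_size, emb_size),
            nn.Dropout(dropout),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)


class Block(nn.Module):
    """Pre-norm block (assumed layout): x + Attn(LN(x)), then x + FFN(LN(x))."""

    def __init__(self, emb_size: int, num_heads: int):
        super().__init__()
        self.ln1 = nn.LayerNorm(emb_size)
        self.attn = nn.MultiheadAttention(emb_size, num_heads, batch_first=True)
        self.ln2 = nn.LayerNorm(emb_size)
        self.ffwd = FeedForward(emb_size)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        T = x.shape[1]
        # True entries are blocked: each position sees only itself and the past.
        causal = torch.triu(torch.ones(T, T, dtype=torch.bool, device=x.device), diagonal=1)
        h = self.ln1(x)
        attn_out, _ = self.attn(h, h, h, attn_mask=causal, need_weights=False)
        x = x + attn_out                # residual around attention
        x = x + self.ffwd(self.ln2(x))  # residual around feedforward
        return x


if __name__ == "__main__":
    torch.manual_seed(0)
    blocks = nn.Sequential(Block(32, 4), Block(32, 4))
    x = torch.randn(2, 8, 32)
    print(x.shape, "->", blocks(x).shape)  # blocks preserve (B, T, emb_size)
```

Since every block preserves (B, T, emb_size), blocks can be stacked freely, which is what the shape printouts are meant to confirm.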

Notes and roadmap

- The repo prioritizes architectural clarity over training bells and whistles

- TODO:

  - Add configs, logging, checkpointing

  - Train the ViT on a tiny dataset (e.g., MNIST) as a proof of concept

  - Add metrics/plots and save/load utilities
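For the MNIST proof of concept, the ViT's first step is turning each image into a token sequence. The sketch below assumes 7×7 patches (so a 28×28 image yields 16 patch tokens plus one [CLS] token) and implements the per-patch linear projection as a strided `nn.Conv2d`; `PatchEmbedding` is a hypothetical name, not necessarily how `models.py` does it.

```python
import torch
import torch.nn as nn


class PatchEmbedding(nn.Module):
    """Hypothetical sketch: split an image into patches and project each to emb_size."""

    def __init__(self, img_size: int = 28, patch_size: int = 7,
                 in_channels: int = 1, emb_size: int = 64):
        super().__init__()
        assert img_size % patch_size == 0, "image must divide evenly into patches"
        self.num_patches = (img_size // patch_size) ** 2
        # A conv with kernel_size == stride == patch_size is a per-patch linear projection.
        self.proj = nn.Conv2d(in_channels, emb_size, kernel_size=patch_size, stride=patch_size)
        self.cls_token = nn.Parameter(torch.zeros(1, 1, emb_size))
        self.pos_emb = nn.Parameter(torch.zeros(1, self.num_patches + 1, emb_size))

    def forward(self, images: torch.Tensor) -> torch.Tensor:
        x = self.proj(images)             # (B, emb, H/p, W/p)
        x = x.flatten(2).transpose(1, 2)  # (B, num_patches, emb)
        cls = self.cls_token.expand(x.shape[0], -1, -1)
        x = torch.cat([cls, x], dim=1)    # prepend a learnable [CLS] token
        return x + self.pos_emb           # learned positional embeddings


if __name__ == "__main__":
    tokens = PatchEmbedding()(torch.randn(3, 1, 28, 28))
    print(tokens.shape)  # 1 [CLS] + 16 patch tokens per image
```

Unlike the language model, a standard ViT feeds these tokens through attention without a causal mask, since every patch may attend to every other patch; classification then reads the final [CLS] token.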

References

- Vaswani et al., “Attention Is All You Need”: https://arxiv.org/abs/1706.03762